Introduction

This is our updated portal for open access to the outcomes of our research projects. Here we provide the code, datasets, models, and pre-trained weights for those projects. This page replaces the old code and database distribution pages.

The research projects are categorized into the following research areas:

- Image Quality Assessment

- Video Quality Assessment

- Image Restoration/Enhancement

- Video Restoration/Enhancement

- Image Degradation Modeling

- 3D Mesh Quality Assessment

- Dense Prediction

- Network Architecture Search

Image Quality Assessment

FocusLiteNN: High Efficiency Focus Quality Assessment for Digital Pathology

We propose FocusLiteNN, a highly efficient CNN-based model for focus quality assessment that has only 148 parameters. It maintains impressive performance and is 100x faster than ResNet50. We also introduce TCGA@Focus, a comprehensive annotated dataset that contains 14,371 pathological images with in-focus/out-of-focus labels.

Unifying Image Quality Assessment Datasets

Unsupervised Score Fusion by Deep Maximum a Posteriori Estimation

The proposed model conducts fine-grained uncertainty estimation at the score level to increase the accuracy and reduce the uncertainty in fused predictions.

Quantifying Visual Image Quality: A Bayesian View

We discuss the implications of the successes and limitations of modern IQA methods for biological vision and the prospect for vision science to inform the design of future artificial vision systems. To facilitate future research in this direction, we have created a unified interface for downloading and loading 19 popular IQA datasets. We provide code for both general Python and PyTorch.

Z. Duanmu, W. Liu, Z. Wang, Z. Wang, "Quantifying Visual Image Quality: A Bayesian View," Annual Review of Vision Science, 2021.

TMQI: Tone Mapped Image Quality Index

Tone mapping operators (TMOs) that convert high dynamic range (HDR) to low dynamic range (LDR) images provide practically useful tools for the visualization of HDR images on standard LDR displays. Tone Mapped image Quality Index (TMQI) is an objective quality assessment algorithm for tone mapped images.

3D Image Quality Assessment

Project: Models and evaluation protocols for predicting stereoscopic 3D image quality under symmetric/asymmetric distortions, including single-view-based predictors and a 2D-to-3D quality prediction model.

Dataset: Phase I and II created from Middlebury Stereo images with three distortions (AWGN, blur, JPEG) at four levels and symmetric/asymmetric combinations; 78/130 single-view and 330/460 stereo images with subjective scores.

Evaluation of Dehaze Performance

Project: Perceptual benchmarking study of single-image dehazing, with a carefully designed human study and analysis to compare 8 algorithms and reveal perceptual artifacts and trade-offs.

Dataset: 25 hazy images (22 real, 3 simulated); 8 dehazing outputs per image, totaling 225 images with MOS from 24 subjects.

Waterloo IVC Multi-Exposure Image Fusion

Project: Perceptual quality assessment for multi-exposure image fusion, proposing an evaluation methodology and analysis to compare fusion operators and guide algorithm design.

Dataset: 17 source sequences, 8 fusion algorithms, 136 fused images with MOS on a 1–10 scale.

Quality Assessment of Interpolated Natural Images

Project: Objective assessment of interpolation quality across scales and algorithms, studying perceptual factors and developing evaluation methods for upsampling artifacts.

Dataset: 13 image sets downsampled by factors 2/4/8; eight interpolation algorithms per set; 30 observers’ ranking-based MOS.

SSIM Family

SSIM: Structural Similarity Index

The Structural SIMilarity (SSIM) index is a method for measuring the similarity between two images. The SSIM index can be viewed as a quality measure of one of the images being compared, provided the other image is regarded as of perfect quality. It is an improved version of the universal image quality index proposed before.
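As a rough illustration of what the index computes, the sketch below evaluates the standard SSIM expression over a single window of pixel intensities. The function name `ssim_single_window` is hypothetical and this is not the released implementation; practical SSIM implementations evaluate the index in local sliding windows and average the resulting quality map. The constants follow the commonly used defaults (K1 = 0.01, K2 = 0.03, 8-bit dynamic range).

```python
def ssim_single_window(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """SSIM over one window of pixel intensities (hypothetical helper).

    SSIM = (2*mu_x*mu_y + C1) * (2*cov_xy + C2)
           / ((mu_x^2 + mu_y^2 + C1) * (var_x + var_y + C2))
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    # Small constants that stabilize the ratio when the denominators are near zero.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2

    mu_x = sum(x) / n
    mu_y = sum(y) / n

    # Unbiased sample variances and covariance.
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


reference = [10, 50, 90, 130]
brighter = [v + 40 for v in reference]  # same structure, shifted luminance

score_same = ssim_single_window(reference, reference)
score_shifted = ssim_single_window(reference, brighter)
```

Comparing an image with itself gives a score of 1, while the luminance-shifted copy scores lower even though its structure is unchanged.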

Maximum Differentiation (MAD) Competition

MAD competition is an efficient methodology for comparing computational models of a perceptually discriminable quantity. Rather than pre-selecting test stimuli for subjective evaluation and comparison to the models, stimuli are synthesized so as to optimally distinguish the models.
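The core idea can be sketched on a toy problem. The original methodology synthesizes stimuli by optimization in image space; the sketch below swaps that for selection from a small, finite candidate pool, which is a simplification. All names (`mad_select`, `neg_mse`, `neg_max_error`) and the toy "models" are hypothetical and only serve to show the principle: hold one model's score fixed and find the stimuli that the other model rates best and worst.

```python
def mad_select(candidates, fixed_model, attacked_model, target_score, tolerance):
    """Pick the pair of stimuli that best distinguishes two models.

    Among candidates that fixed_model rates (nearly) equal to target_score,
    return the ones that attacked_model rates worst and best.
    """
    level_set = [
        c for c in candidates
        if abs(fixed_model(c) - target_score) <= tolerance
    ]
    if len(level_set) < 2:
        raise ValueError("need at least two candidates on the level set")
    worst = min(level_set, key=attacked_model)
    best = max(level_set, key=attacked_model)
    return worst, best


# Toy quality models scoring a distortion vector (higher means better quality).
def neg_mse(distortion):
    return -sum(v * v for v in distortion) / len(distortion)


def neg_max_error(distortion):
    return -max(abs(v) for v in distortion)


candidates = [(2, 2, 2, 2), (4, 0, 0, 0), (1, 1, 1, 1), (3, 2, 1, 1)]

# Hold the MSE-based model near -4 and let the max-error model disagree.
worst, best = mad_select(
    candidates, neg_mse, neg_max_error, target_score=-4.0, tolerance=0.3
)
```

Here the MSE-based model considers the selected pair roughly equivalent, while the max-error model rates them very differently; showing such a pair to human observers reveals which model is closer to perception. A full competition repeats the procedure with the roles of the two models swapped.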

Universal Image Quality Index

The universal image quality index is an objective measure that is easy to calculate and applicable to various image processing applications. Instead of using traditional error summation methods, the proposed index is designed by modeling any image distortion as a combination of three factors: loss of correlation, luminance distortion, and contrast distortion.
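The three-factor decomposition can be made concrete with a minimal sketch that evaluates the index over a single window. The function name `universal_quality_index` is hypothetical and this is not the distributed code; in practice the index is computed in local sliding windows and averaged over the image.

```python
import math


def universal_quality_index(x, y):
    """Return (correlation, luminance, contrast, q) for one window.

    q is the product of the three factors, which equals
    4*cov_xy*mu_x*mu_y / ((var_x + var_y) * (mu_x^2 + mu_y^2)).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    std_x, std_y = math.sqrt(var_x), math.sqrt(var_y)

    # The index has no stabilizing constants, so flat or zero-mean
    # windows leave it undefined.
    if std_x == 0 or std_y == 0 or (mu_x == 0 and mu_y == 0):
        raise ValueError("index is undefined for flat or zero-mean windows")

    correlation = cov_xy / (std_x * std_y)                # loss of correlation
    luminance = 2 * mu_x * mu_y / (mu_x ** 2 + mu_y ** 2)  # luminance distortion
    contrast = 2 * std_x * std_y / (var_x + var_y)         # contrast distortion
    return correlation, luminance, contrast, correlation * luminance * contrast


reference = [10, 50, 90, 130]
doubled = [2 * v for v in reference]  # perfectly correlated, brighter, more contrast

correlation, luminance, contrast, q = universal_quality_index(reference, doubled)
```

For the doubled signal the correlation factor stays at 1 while the luminance and contrast factors each drop to 0.8, so the overall index is 0.64. The undefined cases for flat windows are one motivation for the stabilizing constants introduced later in SSIM.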

Z. Wang and E. P. Simoncelli, "Maximum differentiation (MAD) competition: A methodology for comparing computational models of perceptual discriminability," Journal of Vision, vol. 8, no. 12, pp. 1-13, Sept. 2008.

Z. Wang and Q. Li, "Information Content Weighting for Perceptual Image Quality Assessment," IEEE Transactions on Image Processing, vol. 20, no. 5, pp. 1185-1198, May 2011.

Z. Wang, E. P. Simoncelli and A. C. Bovik, "Multi-scale structural similarity for image quality assessment," IEEE Asilomar Conference on Signals, Systems and Computers, Nov. 2003.

Z. Wang and A. C. Bovik, "A Universal Image Quality Index," IEEE Signal Processing Letters, vol. 9, no. 3, pp. 81-84, March 2002.

Reduced-Reference Image Quality Assessment

Reduced-reference image quality assessment predicts visual quality of distorted images using only partial information about reference images, bridging the gap between full-reference and no-reference methods. The proposed method uses Kullback-Leibler distance between marginal probability distributions of wavelet coefficients, with a generalized Gaussian model to efficiently summarize reference image statistics.

More image and video quality-related demos are provided at Prof. Wang’s research page:

- SSIMplus index for video quality-of-experience assessment

- Foveated scalable image coding

- Human vision foveation model

- Improved JPEG2000 ROI coding

Video Quality Assessment

4K Video Quality Assessment

Project: Comparative study of modern codecs (H.264/AVC, HEVC, VP9, AVS2, AV1) on 4K content across resolutions, analyzing coding efficiency and perceptual quality.

Dataset: 20 4K sources encoded by 5 encoders at three resolutions and four distortion levels (total 1200 videos), with provided scores and bitstreams.

Image Restoration/Enhancement

Deep Image Debanding

Banding, or false contour, is an annoying visual artifact that degrades the perceptual quality of visual content. In this work, we construct a large-scale dataset of 51,490 pairs of corresponding pristine and banded image patches, which enables us to make one of the first attempts at developing a deep learning based banding artifact removal method for images, which we name deep debanding network (deepDeband). We also develop a bilateral weighting scheme that fuses patch-level debanding results into full-size images.

Video Restoration/Enhancement

Video Denoising Based on Spatiotemporal Gaussian Scale Mixture Model

Video denoising employs Gaussian Scale Mixture modeling to capture correlations in 3D blocks of wavelet coefficients across space, scale, orientation, and time. The method incorporates noise-robust motion estimation to enhance temporal correlations between wavelet coefficients, followed by Bayesian least square estimation for competitive denoising performance.

G. Varghese and Z. Wang, "Video denoising using a spatiotemporal statistical model of wavelet coefficients," IEEE International Conference on Acoustics, Speech, & Signal Processing, Las Vegas, Nevada, Mar. 30 - Apr. 4 2008.

Image Degradation Modeling

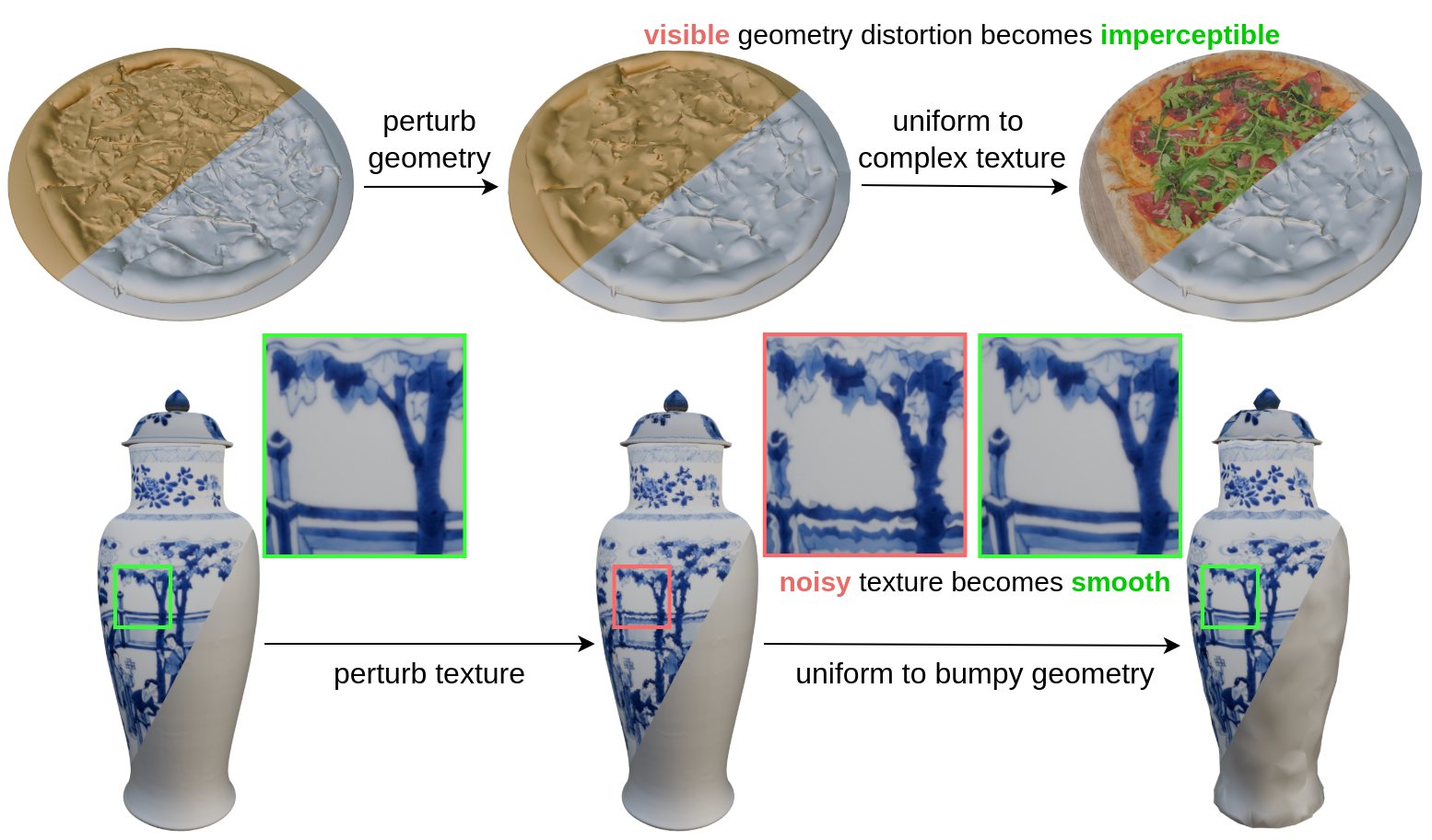

Towards a Universal Image Degradation Model via Content-Degradation Disentanglement

Image degradation synthesis and modeling is highly desirable in a wide variety of applications ranging from image restoration to simulating artistic effects. The design of existing degradation models requires very strong domain knowledge, and these models cannot be easily adapted to other applications. We propose a universal image degradation model that can be easily adapted to different applications, which can significantly reduce the effort of designing degradation models.

3D Mesh Quality Assessment

HybridMQA: Exploring Geometry-Texture Interactions for Colored Mesh Quality Assessment

HybridMQA is a first-of-its-kind hybrid full-reference colored mesh quality assessment (MQA) framework that integrates model-based and projection-based approaches. It captures complex interactions between textural information and 3D structures for enriched quality representations. Our method employs graph learning to extract detailed 3D representations, which are then projected to 2D using a novel feature rendering process that precisely aligns them with colored projections. This enables the exploration of geometry-texture interactions via cross-attention, producing comprehensive mesh quality representations.

Perceptual Crack Detection for Rendered 3D Textured Meshes

We propose a Perceptual Crack Detection (PCD) method, one of the first attempts at detecting and localizing crack artifacts in rendered meshes. Specifically, motivated by the characteristics of the human visual system (HVS), we adopt contrast and Laplacian measurement modules to characterize crack artifacts and differentiate them from other undesired artifacts.

Dense Prediction

Relationship Spatialization for Depth Estimation

Relationship spatialization identifies and quantifies how inter-object spatial relationships contribute useful spatial priors for monocular depth estimation. The proposed learning-based framework extracts, spatially aligns, and adaptively weights relationship representations to enhance existing depth estimation models across multiple datasets.

Network Architecture Search